Workflows for Reproducibility

2025-09-08

Workflows

Data collection is only the start

Once the data from a study are available, there are still a number of steps that must be undertaken to get them into shape for analysis.

One of the most misunderstood parts of the analysis process is the data preparation stage. It is no exaggeration to say that 70% of the time spent on any analysis goes into data management.

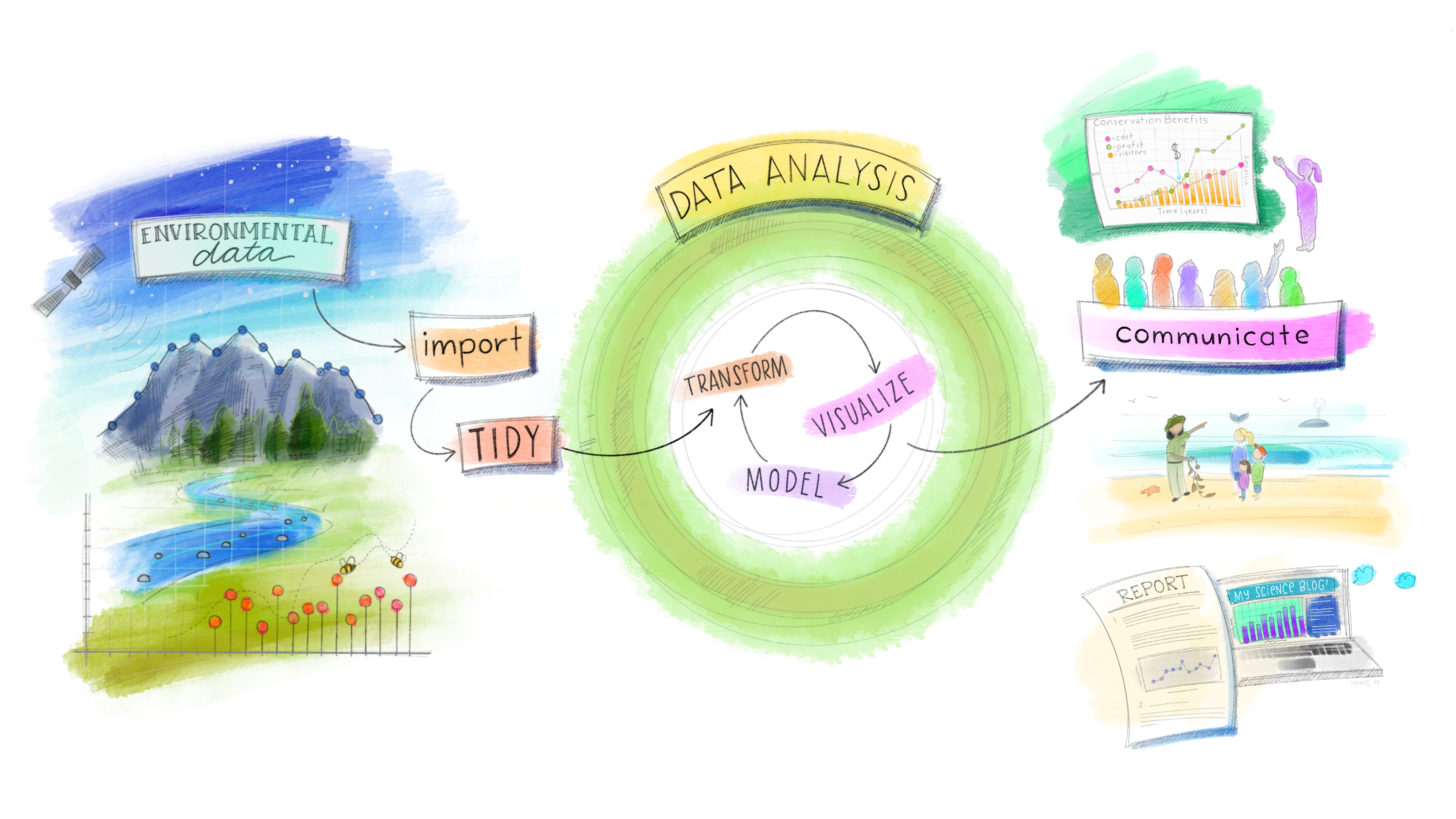

Example Workflow

Fig ref: Updated from Grolemund & Wickham’s classic R4DS schematic, envisioned by Dr. Julia Lowndes for her 2019 useR! keynote talk and illustrated by Allison Horst.

Generating reproducible workflows

Reproducibility is the ability of any researcher to take the same data set, run the same set of software instructions (code) as another researcher, and obtain the same results.

This is not the same as replicability, where you re-run an experiment, collecting new data, and achieve the same outcomes.

The goal is to create an exact record of what was done to a data set to produce a specific result.

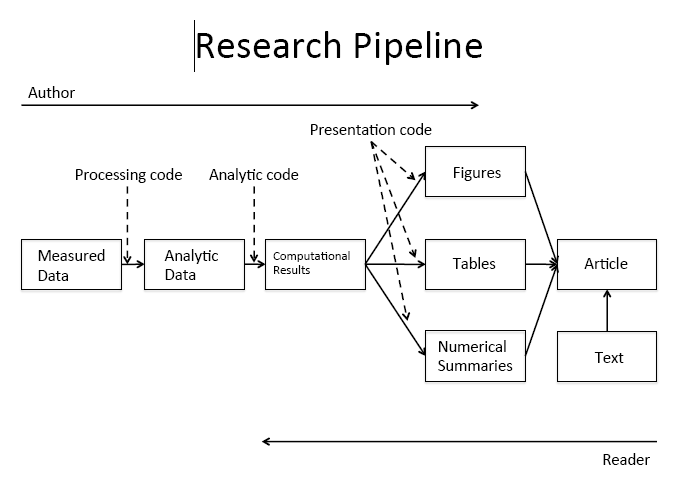

Three steps to achieve reproducibility

- The unprocessed (raw) data are connected directly to software code file(s) that perform the data preparation.

- The processed data are connected directly to other software code file(s) that perform the analyses.

- All data and code files are self-contained, so they could be given to another researcher, who could run the code on a separate computer and achieve the same results as the original author.

Figure Credits: Roger Peng
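The three steps above can be sketched as two small R scripts, shown here in one block. This is a minimal base-R illustration under stated assumptions, not a prescribed layout: the folder names (`data/raw`, `data/processed`), the file `survey.csv`, the script names `01-prep.R` and `02-analysis.R`, the -99 missing-value code, and the five made-up rows are all hypothetical. The `setwd()` calls only simulate opening a project folder so the example runs anywhere; inside an R Project you would not need them.

```r
# Hypothetical project folder created in a temporary location for this demo.
project <- file.path(tempdir(), "demo-project")
dir.create(file.path(project, "data", "raw"), recursive = TRUE, showWarnings = FALSE)
dir.create(file.path(project, "data", "processed"), recursive = TRUE, showWarnings = FALSE)
old_wd <- setwd(project)  # simulates opening the project; not needed in an R Project

# Made-up raw data standing in for a file from data collection.
# In a real project this file is only ever read, never edited by hand.
write.csv(
  data.frame(id = 1:5,
             age = c(34, -99, 51, 27, 45),
             group = c("a", "b", "a", "b", "a")),
  file.path("data", "raw", "survey.csv"),
  row.names = FALSE
)

## Step 1: data preparation (e.g. a hypothetical 01-prep.R) ----
survey_raw <- read.csv(file.path("data", "raw", "survey.csv"))
# WHY: -99 was (hypothetically) used as the missing-value code, so recode it to NA
survey_raw$age[survey_raw$age == -99] <- NA
# Treat group as a categorical variable
survey_raw$group <- factor(survey_raw$group)
# Save as .rds so R types such as factors survive the hand-off to step 2
saveRDS(survey_raw, file.path("data", "processed", "survey-clean.rds"))

## Step 2: analysis (e.g. a hypothetical 02-analysis.R) ----
clean <- readRDS(file.path("data", "processed", "survey-clean.rds"))
# Mean age per group; the formula method drops rows with NA by default
group_means <- aggregate(age ~ group, data = clean, FUN = mean)
print(group_means)

setwd(old_wd)  # restore the original working directory
```

Because the raw file is only read and never overwritten, re-running both steps from the top always regenerates the processed data and the group means.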

Literate programming

- Explain the logic of the program or analysis process in natural language.

- Small code snippets included at each step act as a full set of instructions that can be executed to reproduce the result/analysis being discussed.

- Literate programming tools built on markup languages such as Markdown and \(\LaTeX\) are available in all common statistical packages except SPSS.

Reproducible Research + Literate Programming

- Practicing reproducible research techniques with literate programming tools turns major updates, such as receiving a corrected or expanded data set, into a simple matter of re-compiling all coded instructions using the updated data set.

- The effort then is reduced to a careful review and update of any written results.

- Literate programming tools create formatted documents in a streamlined manner, fully synchronized with the code itself.

- The author writes the text explanations, interpretations, and code in the statistical software program itself, and the program executes all commands and combines the text, code, and output into a final dynamic document.

Why all the fuss?

- You are your own collaborator 6 months from now. Be nice to your future self.

- Explain your steps (the WHY more than the what)

- How did you get from point A to B?

- Why did you recode this variable in this manner?

- Found an error in your analysis code? Need to add an analysis to your presentation?

- Reproduce your steps in a few clicks using a script file (.R, .Rmd, .sas, .sps, .do, .ipynb).

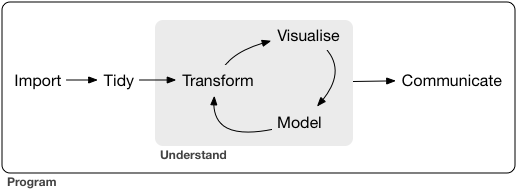

Data Analysis Pipeline

In this model of the data science process, you start with data import and tidying. Next, you understand your data with an iterative cycle of transforming, visualizing, and modeling. You finish the process by communicating your results to other humans. (Ref: R for Data Science, 2nd ed.)

Regardless of the programming language you choose, working from scripts makes this process reproducible, more powerful, and less painful.
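As a rough illustration of the cycle just described, the script below touches each stage once, using R's built-in `mtcars` data as a stand-in for your own imported file. The specific steps (moving row names into a column, converting weight to pounds, fitting a simple linear model) are arbitrary examples chosen for brevity, not a recommended analysis, and the output file name is hypothetical.

```r
# Import: mtcars ships with R; in practice this would be read.csv() (or similar)
# on a data file stored inside your project folder.
car_data <- mtcars

# Tidy: keep the car model names (stored as row names) as an ordinary column.
car_data$model <- rownames(car_data)
rownames(car_data) <- NULL

# Transform: mtcars records weight in units of 1000 lb, so convert to pounds.
car_data$wt_lb <- car_data$wt * 1000

# Visualize: write the plot to a file so it is regenerated on every run.
plot_file <- file.path(tempdir(), "mpg-vs-weight.pdf")
pdf(plot_file)
plot(mpg ~ wt_lb, data = car_data,
     xlab = "Weight (lb)", ylab = "Miles per gallon")
invisible(dev.off())

# Model: simple linear regression of fuel economy on weight.
fit <- lm(mpg ~ wt_lb, data = car_data)

# Communicate: a compact table of estimates, standard errors, and p-values.
estimates <- round(coef(summary(fit)), 4)
print(estimates)
```

Re-running the script regenerates the plot file and the estimates from scratch; in a Quarto or R Markdown document, the same code chunks would sit alongside the written interpretation.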

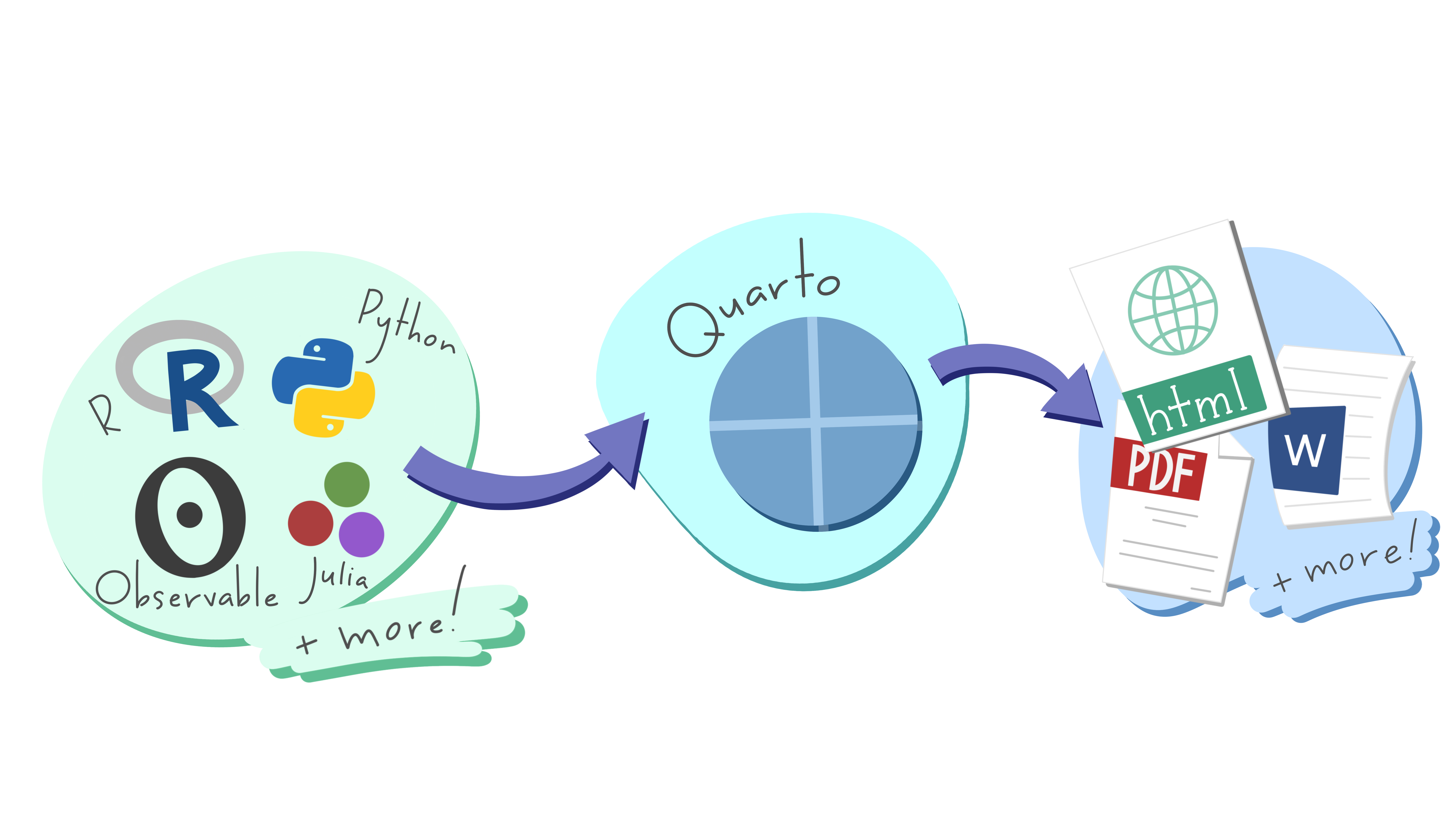

Quarto as an end-to-end solution

- “Next Generation” R Markdown

- Publish reproducible, production quality articles, presentations, dashboards, websites, blogs, and books in HTML, PDF, MS Word, ePub, and more.

- Write using Pandoc markdown, including equations, citations, crossrefs, figure panels, callouts, advanced layout, and more.

Using R Projects

- R Projects are self-contained workspaces in which to keep all files and data related to a project.

- Opening a project sets the working directory to the project folder, so code can use relative paths, enabling easier collaboration

- Follow this walkthrough from RStudio
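To see why relative paths help collaboration, the sketch below simulates two collaborators (the hypothetical `alice` and `bob`) who each keep a copy of the same project in a different location. The file `data/hw1.csv` and its scores are made up for the demo, and `setwd()` stands in for opening the `.Rproj` file, which in RStudio sets the working directory to the project folder.

```r
# Two copies of one hypothetical project, standing in for two computers.
copies <- file.path(tempdir(), c("alice", "bob"), "Math615")
for (p in copies) {
  dir.create(file.path(p, "data"), recursive = TRUE, showWarnings = FALSE)
  # Made-up scores used only for this demonstration
  write.csv(data.frame(score = c(88, 92, 75)),
            file.path(p, "data", "hw1.csv"), row.names = FALSE)
}

# An absolute path like this works on only one machine:
# read.csv("C:/Users/alice/Documents/Math615/data/hw1.csv")

# A path relative to the project folder works in every copy.
rel_path <- file.path("data", "hw1.csv")

results <- sapply(copies, function(p) {
  old_wd <- setwd(p)       # stands in for opening the project
  on.exit(setwd(old_wd))   # restore the previous working directory afterwards
  mean(read.csv(rel_path)$score)
})
print(results)
```

The same line of analysis code runs in both copies because it never mentions where the project lives on disk.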

Required

Using R Projects is a required part of this class. Spend a few minutes turning your Math 615 folder into an R Project now.